This is the third post in a series I'm writing as I continue to upskill in DevOps. I am excited to share my journey with you and hope you find these posts informative and helpful.

In the last post, we discussed how to create an image of our application using a Dockerfile. In this post, we will put that into practice with a sample project.

Project Setup

First, let us create a basic Node server to get started. Follow along with the steps below if you don't have experience working with Node.js.

Download and install Node.js and npm if you don't already have them.

Create a new directory wherever you'd like on your system using

mkdir <directory-name>

replacing <directory-name> with the name you want it to have.

Fire up the terminal, change into the new directory, and run

npm init --yes

Create a file named index.js and paste the following code into it.

const express = require("express");

const app = express();

app.get("/", (req, res) => {

res.send("Hello World!");

});

app.listen(3000, () => {

console.log("Example app listening on port 3000!");

});

Open the package.json file, add a start script, and add a new section called dependencies, like this:

"scripts": { "start": "node index.js" },

"dependencies": { "express": "*" }

Now, let's try containerising this project in the same way we learnt in the last post. Create a Dockerfile and add these lines:

# specify the base image

FROM alpine

# Install dependencies

RUN npm install

# startup command

CMD ["npm", "start"]

From what we discussed in the previous post, generating an image from this Dockerfile using

docker build .

should work completely fine. But that is not the case here, and this is expected. Why do you think we are experiencing this?

Hmm, if you remember from the previous post, an image consists of two parts: the filesystem snapshot, and the startup command that is executed when we use the image to start a container. The filesystem snapshot is the exact reason why we get an error while trying to create the image: the alpine image does not have node and npm installed by default, so when we say npm install, it doesn't really know what npm is. There are two ways to solve this:

Run the image and manually install node and npm inside the container.

Use a different base image (one which already has node and npm installed).

While either approach can be made to work, the second option is the standard way of dealing with such scenarios.

So we update our Dockerfile to use a base image which already has node and npm installed.

You can head over to Docker Hub and find the official node image. It is not exactly an image "of" node; it is an image that has node already installed in its filesystem snapshot, readily available for our convenience. The updated Dockerfile now looks like this:

# specify the base image

FROM node:14-alpine

# Install dependencies

RUN npm install

# startup command

CMD ["npm", "start"]

14-alpine is just a version tag here: 14 is the Node.js version, and alpine means the image is built on Alpine Linux, a stripped-down base that only has the tools and commands that are absolutely required, which keeps it lightweight.

When we try to create an image again using the docker build . command, it still throws an error, saying it couldn't find the package.json file it needs to open to see which dependencies to install. This is expected too, because package.json is on our local machine and not in the container. We discussed in part 1, the introduction to Docker, that a container is an individual and completely isolated segment of the hard drive. So if we want this to work, we need to make the project files available inside the container. To do this we can use Docker's COPY instruction.

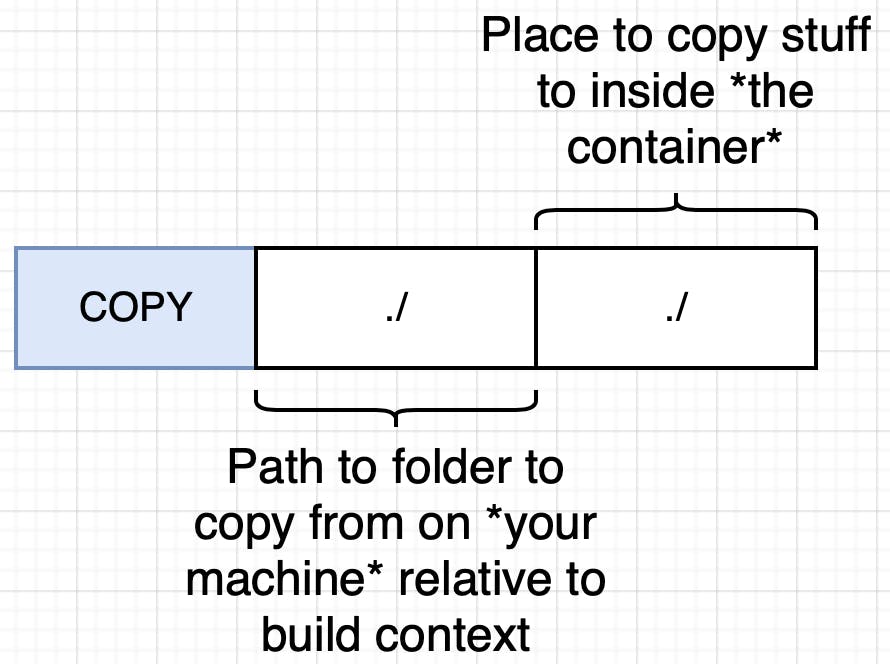

COPY ./ ./

Here we are using the COPY instruction to copy files from the local machine into the container. The first path is the source, relative to the build context, and the second path is the destination inside the container.

Now let's modify the Dockerfile accordingly. This is how it looks after the change:

# specify the base image

FROM node:14-alpine

# copy from the current build context into the container

COPY ./ ./

# install a few dependencies

RUN npm install

# Run the default commands

CMD ["npm", "start"]

Now everything looks good. Let's try starting the container and check if it's working fine.

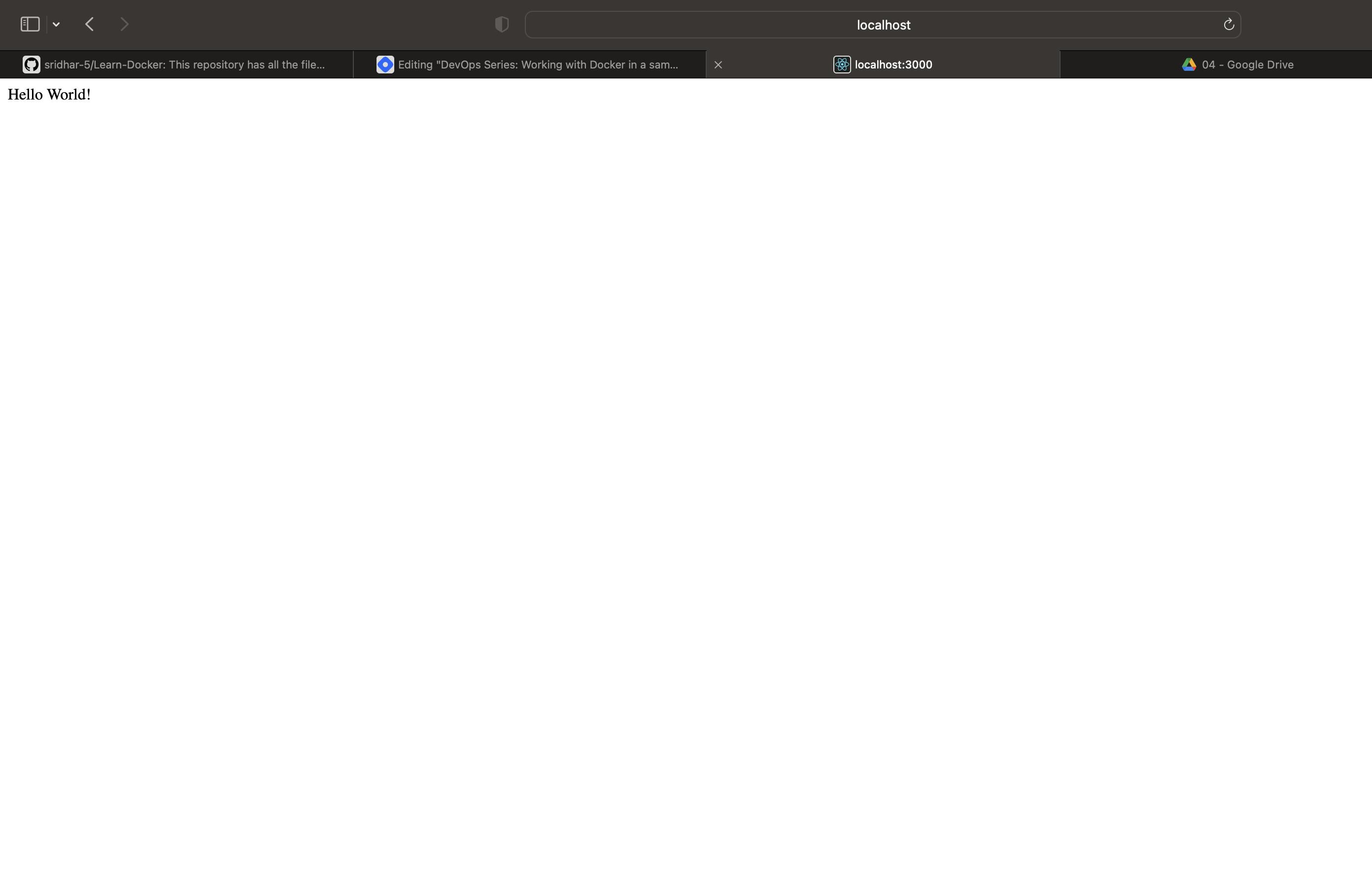

Huh..! The server appears to have started, so let's quickly fire up the browser and see what is up there. Ideally we should see a plain-text "Hello World!" when we browse to localhost:3000. Let's see what shows up now.

Hmm, seems strange, right? The server appears to be running, but the "Hello World!" message still isn't showing up in the browser. Why is that?

The Problem

The reason we are still seeing the "Can't connect to the server" error is that the request we are making goes to port 3000 on our local machine, not to the container. A point to note here is that no traffic coming into localhost is routed to the container by default. The container has its own isolated set of ports that can receive traffic.

The Solution

So we have to explicitly set up a port mapping. What we are saying here is that any time a request arrives on a given port of our machine, it should be automatically forwarded to a specified port in the container. This is not something we set inside the Dockerfile. It is something we do at runtime:

docker run -p 3000:3000 <image-id>

Here, by specifying 3000:3000, we are basically saying: route all incoming requests on <incoming-request-port>:<into-the-isolated-container-port>, that is, from port 3000 on our machine to port 3000 inside the container. The two numbers don't have to match. Once the port is mapped, it solves the "Can't connect to the server" problem, and you can see the "Hello World!" message when you browse to localhost:3000.
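To make the order of the two numbers concrete, here is a tiny JavaScript sketch that splits a mapping into its two halves. It is only an illustration: describePortMapping is a made-up helper, not part of Docker, and it handles just the simple <host-port>:<container-port> form, while the real -p flag accepts more forms than this.

```javascript
// Illustration only: splits the simple "<host-port>:<container-port>" form
// that we pass to `docker run -p`. Other forms of the flag are not handled.
function describePortMapping(mapping) {
  const [hostPort, containerPort] = mapping.split(":").map(Number);
  if (!Number.isInteger(hostPort) || !Number.isInteger(containerPort)) {
    throw new Error(`Expected "<host-port>:<container-port>", got "${mapping}"`);
  }
  // The left number is the port on our machine, the right one is inside the container.
  return { hostPort, containerPort };
}

console.log(describePortMapping("3000:3000"));
console.log(describePortMapping("8080:3000"));
```

With a mapping like 8080:3000, the app inside the container would still listen on port 3000, but we would browse to localhost:8080 instead.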

Setting up WORKDIR in the container

If you open a shell inside the container and list the files in the root directory, you can see that all our project files are sitting at the root.

Now that is bad, because there is a very good chance that we have a directory called lib in our project. When we copy the lib directory from our local machine into the container, we might overwrite the content that already exists in that directory, which might break some functionality. To avoid this risk, there is a special Dockerfile instruction known as WORKDIR. This instruction takes in a path:

WORKDIR <path-to-copy-project-files-to-inside-container>

If that directory is not present in the container, it will be created. Once we set WORKDIR in the Dockerfile, the instructions that follow it run relative to that directory, so the ./ destination in our COPY instruction now points there. (We could get a similar result by writing the full destination path in the COPY instruction instead, but WORKDIR also applies to the RUN and CMD steps.)

So the updated Dockerfile would now look like this:

# specify the base image

FROM node:14-alpine

# setup a workdir

WORKDIR /usr/app

# copy from the current build context into the container

COPY ./ ./

# install a few dependencies

RUN npm install

# Run the default commands

CMD ["npm", "start"]
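As a rough illustration of what WORKDIR changes, the sketch below uses Node's built-in path module to mimic how a relative destination such as ./ is resolved against the working directory. The helper resolveDestination is a made-up name, and this is plain path arithmetic, not Docker's own code. It also assumes the working directory is / when no WORKDIR is set, which matches what we saw in the container shell.

```javascript
const path = require("path");

// Mimics resolving a relative destination against the working directory.
// Illustration only; this is not how Docker is implemented.
function resolveDestination(workdir, destination) {
  return path.posix.resolve(workdir, destination);
}

// Without WORKDIR (assumed working directory "/"), the files land in the root.
console.log(resolveDestination("/", "./"));

// With WORKDIR /usr/app, the same "./" destination points inside /usr/app.
console.log(resolveDestination("/usr/app", "./"));
console.log(resolveDestination("/usr/app", "./index.js"));
```

The COPY ./ ./ line itself never changes. Only the directory it is resolved against does, which is why adding a single WORKDIR line is enough to move the project files out of the root.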

Unnecessary rebuilds problem

Imagine that you don't want to send the "Hello World!" text anymore and want to send something else, like "Hi there". Let's go ahead and make that change, save the file, and see what happens in the browser.

Woah..! Nothing changed. Why is that? Because the file you changed is in your local file system and not in the container's file system. Until the index.js file inside the container is updated, you are going to see the same output in the browser. Since the copy happens as a step in the Dockerfile we created earlier, updating it basically means rebuilding the image.

Rebuilding the image solves the issue we are facing now, but is it ideal to rebuild the entire image and install all the dependencies again just because we want to update the string we send in the response? The obvious answer is no. So how do we avoid re-installing all the dependencies over and over again when we update a single string? We can slightly reorder the Dockerfile by making the following changes to it:

# specify the base image

FROM node:14-alpine

# setup a workdir

WORKDIR /usr/app

# install a few dependencies

COPY ./package.json ./

RUN npm install

COPY ./ ./

# Run the default commands

CMD ["npm", "start"]

Here we are using the fact that the npm install command only needs the package.json file to run smoothly. So we copy the package.json file into the working directory first, install the dependencies, and only then copy the rest of the project, including index.js. Docker caches the result of each step and reuses it as long as that step and the files it copies have not changed. So the next time we update the string in index.js, only the COPY ./ ./ step and the steps after it run again. The earlier steps, including npm install, are served from the cache.
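To see why the order matters, here is a minimal JavaScript sketch of that caching rule. It is a toy model under simplifying assumptions, not how Docker is actually implemented: stepsToRerun and the copies field are names made up for this illustration, and "*" stands for "every file in the build context".

```javascript
// Toy model of the build cache (illustration only, not Docker's real logic).
// Each step lists the build-context files it copies; "*" means all of them.
function stepsToRerun(steps, changedFiles) {
  if (changedFiles.length === 0) return []; // nothing changed, everything is cached
  // The first step that copies a changed file misses the cache...
  const firstMiss = steps.findIndex((step) =>
    step.copies.some((file) => file === "*" || changedFiles.includes(file))
  );
  // ...and every step after it has to run again as well.
  return firstMiss === -1 ? [] : steps.slice(firstMiss).map((step) => step.instruction);
}

const base = [
  { instruction: "FROM node:14-alpine", copies: [] },
  { instruction: "WORKDIR /usr/app", copies: [] },
];
const copyManifest = { instruction: "COPY ./package.json ./", copies: ["package.json"] };
const copyAll = { instruction: "COPY ./ ./", copies: ["*"] };
const install = { instruction: "RUN npm install", copies: [] };
const start = { instruction: 'CMD ["npm", "start"]', copies: [] };

// The earlier Dockerfile: copy everything, then install.
const copyEverythingFirst = [...base, copyAll, install, start];
// The reordered Dockerfile: copy package.json, install, then copy the rest.
const copyManifestFirst = [...base, copyManifest, install, copyAll, start];

console.log(stepsToRerun(copyEverythingFirst, ["index.js"]));
console.log(stepsToRerun(copyManifestFirst, ["index.js"]));
```

With index.js as the only changed file, the first result includes RUN npm install and the second does not. If package.json changes instead, the reordered Dockerfile reruns from COPY ./package.json ./ onwards, which is what we want, since the dependencies may have changed.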

Credit to the instructor of the "Docker and Kubernetes: The Complete Guide" course on Udemy for all the pictures and his amazing explanation.

I hope this blog has given you some further insights on Docker. I'm still learning and trying to upskill myself in DevOps. So if you find anything wrong with my understanding above, please leave a comment below (Always curious to learn) and if you have any questions or comments, please feel free to leave them below. Thank you for reading. Will see you in the next one. Stay Tuned. Peace..✌️